Case Study: How we migrated Java Selenium tests to Python Robot Framework

Background: We recently worked on a project to migrate 810+ test cases written in a Java + TestNG + Maven + Jenkins stack to a Python + Robot Framework + GitLab stack.

Stakeholders wanted to switch to Python and Robot Framework for the following reasons:

- Robot Framework is keyword-driven, so even manual QAs with no coding background can write tests.

- Since Python is the development language, automation code can live alongside the development code, which makes pipeline integration easier.

- Since Robot Framework already provides keyword wrappers, writing new tests takes less time and less code once you are proficient with it.

- Robot Framework supports Gherkin and plain-English keywords, so with auto-suggest enabled, QAs can write tests without much help from others.

- Developers can write backend tests themselves.

Process:

When I looked into the Java code, I could see that we were testing many things the wrong way. After analyzing the whole codebase, I identified the following areas that needed improvement:

1. Low API/Backend Coverage (solution: API test coverage): In Java, all the coverage was on the GUI. Even simple calculation changes, such as calculating a product's price for a user, were checked via frontend assertions, and any API the frontend does not call (such as those used for async actions or cron jobs) was never tested.

This was the first thing I tackled. In the first 3 months, I focused on API regression coverage: testing all the endpoints, all the payloads, and all the flows possible through the API. We wrote 180+ tests, and since API tests were faster and more stable, a lot of the GUI tests automatically became irrelevant.

Testing APIs is pretty simple in Robot Framework. If your assertion does not involve a big schema, a test can be a single line, because the request keywords have a built-in status code assertion:

```robot
${response}    POST On Session    product_session    ${end_point}    headers=${headers}    json=${payload}
```
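The Robot keyword above fails on its own when the response carries an error status (RequestsLibrary's On Session keywords do this by default and also accept an expected_status argument). For comparison, here is a minimal plain-Python sketch of the same one-call check using only the standard library. The post_json helper, the echo server, and the /products path are hypothetical stand-ins for the real product API.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Opener with no proxies, so the local demo traffic never leaves the machine.
_DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def post_json(url, payload, headers=None, expected_status=200):
    """POST a JSON payload and fail unless the response has the expected status."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", **(headers or {})},
        method="POST",
    )
    try:
        with _DIRECT.open(request) as response:
            status, body = response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as error:  # urllib raises on 4xx/5xx responses
        status, body = error.code, error.read().decode("utf-8")
    assert status == expected_status, f"expected {expected_status}, got {status}"
    return json.loads(body) if body else None


class _EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the product API: echoes the JSON it receives."""

    def do_POST(self):
        data = self.rfile.read(int(self.headers.get("Content-Length", 0)))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):  # silence per-request logging
        pass


def start_fake_server():
    """Start the echo server on a free local port; returns (server, endpoint URL)."""
    server = HTTPServer(("127.0.0.1", 0), _EchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}/products"


if __name__ == "__main__":
    server, url = start_fake_server()
    print(post_json(url, {"card_type": "ss"}))  # {'card_type': 'ss'}
    server.shutdown()
    server.server_close()
```

Putting the status check inside the helper mirrors the Robot keyword: every call asserts the status, so a test body only needs extra assertions when it cares about the response content.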

2. Duplicate Code (solution: enums, optional arguments, default arguments): One of the biggest issues I saw was code duplication. For example, whenever a user journey needed to go from section “A” to section “D”, we always scripted the entire flow step by step.

Rather than repeating the same code again and again, we modeled the steps as enums and used one generic method that reaches any step in a single call, saving tens of lines of code.

Sample: ProceedTill(fromStep=A, toStep=D)

This covers every combination, such as A-B, B-D, B-C, A-C, C-D and so on, saving hundreds of lines of code across multiple test cases.
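A minimal Python sketch of the enum-based ProceedTill idea, assuming the journey is a fixed, ordered sequence of sections. The Step enum, the transition labels (borrowed loosely from the page actions shown later), and proceed_till are hypothetical names for illustration. Both arguments have defaults, so a test only passes what differs from the full A-to-D journey.

```python
from enum import IntEnum


class Step(IntEnum):
    """Hypothetical journey sections, numbered in the order a user visits them."""
    A = 1
    B = 2
    C = 3
    D = 4


# Hypothetical action that moves the user from each step to the next one.
TRANSITIONS = {
    Step.A: "click Go",           # A -> B
    Step.B: "click Build Now",    # B -> C
    Step.C: "fill card details",  # C -> D
}


def proceed_till(from_step=Step.A, to_step=Step.D):
    """Perform only the transitions between from_step and to_step, in order."""
    if to_step < from_step:
        raise ValueError(f"cannot go backwards from {from_step.name} to {to_step.name}")
    performed = []
    for step in Step:  # iterates A, B, C, D in definition order
        if from_step <= step < to_step:
            # A real suite would call the page action here; the sketch records it.
            performed.append(TRANSITIONS[step])
    return performed
```

For example, proceed_till(Step.B, Step.D) performs only the B-to-C and C-to-D transitions, while proceed_till() walks the whole journey.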

3. No Data-Driven Testing (solution: Test Template): One of the biggest issues was that the Java code had no data-driven testing, so we ended up creating multiple tests with hundreds of lines, repeating 90% of the code just to write the other 10% of the flow differently.

For example, the BuilderAI Studio product has six build card types (code words: store, pro, pp, tm, sp, fm) and supports 20 currencies for payment, so a test matrix for these flows produces 6 × 20 = 120 cases.

Written by hand, that means a lot of duplicate code, and chances are you will miss some flows too. With Robot Framework's “Test Template” feature, though, data-driven testing is real fun.

You can create a single keyword with two arguments, ${card_type} and ${currency_code}, and pass different values to it, so each test case is a single line. All of the code is reused except for two branches:

- if ${card_type} == "ss", perform the SS-related actions (and so on for the other card types)

- if ${currency_code} == ${currency_code.INR}, select INR as the currency

That's it: you end up creating all 120 tests from the same code, one line per test, like this:

```robot
*** Settings ***
Test Template    Verify Build Card Creation

*** Test Cases ***
Verify SS Card Creation INR    ${card_types.ss}    ${currency_code.INR}
Verify SP Card Creation INR    ${card_types.sp}    ${currency_code.INR}
Verify SS Card Creation USD    ${card_types.ss}    ${currency_code.USD}
Verify SP Card Creation USD    ${card_types.sp}    ${currency_code.USD}
```
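To make the mechanics concrete, here is a small Python model of what Test Template does: one shared flow parameterized by the two values, plus a cross product that expands card types and currencies into named cases. The function names and step strings are hypothetical; the card type code words come from the example above, and the two-currency list in the demo is only a subset of the 20.

```python
from itertools import product

# Card type code words from the article.
CARD_TYPES = ["store", "pro", "pp", "tm", "sp", "fm"]


def verify_build_card_creation(card_type, currency_code):
    """Hypothetical template: one shared flow where only two steps depend on the data."""
    steps = ["open build card page"]
    steps.append(f"perform {card_type}-specific actions")   # card-type branch
    steps.append(f"select {currency_code} as the currency")  # currency branch
    steps.append("submit and verify card creation")
    return steps


def build_cases(card_types, currencies):
    """Expand every (card type, currency) pair into a uniquely named test case."""
    return {
        f"Verify {card.upper()} Card Creation {currency}": (card, currency)
        for card, currency in product(card_types, currencies)
    }


if __name__ == "__main__":
    # Demo with two currencies only: 6 card types x 2 currencies = 12 cases.
    for name, args in build_cases(CARD_TYPES, ["INR", "USD"]).items():
        print(name, verify_build_card_creation(*args))
```

With the full list of 20 currencies, the same two lists expand to 120 cases without writing any additional flow code.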

4. No Component Testing (solution: deep links, atomic tests): Looking at the code, it was clear that test pyramid concepts were missing. In an application with 10 pages, where page 1 is the first and page 10 is the last, we went through pages 1 to 10 in sequence just to verify some components of page 10. This caused a lot of flakiness and cost a lot of code and execution time.

To solve this, I introduced component testing: use API filters to fetch data created by backend runs, then use that data in the frontend via deep links.

For example, take page 10, whose URL contains a unique id. Backend runs had already created tons of unique ids in the DB, so I used API filters to fetch the latest data (created in the last 2 hours) and a simple format function to substitute that id into the deep-link URL. This way, after login (which is also done through cookie injection), we go to page 10 directly without visiting the other 9 pages, saving lots of code and execution time. Brilliant, right? That's the power of combining deep links and component testing with API filters.
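A minimal Python sketch of the id-selection and deep-link step, assuming each record returned by the API filter carries a unique_id and a timezone-aware created_at timestamp. The URL template and the record shape are hypothetical placeholders; str.format does the substitution described above.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical deep-link template for page 10.
PAGE_10_TEMPLATE = "https://app.example.com/build-card/{unique_id}/page-10"


def latest_recent_id(records, now, window=timedelta(hours=2)):
    """Return the newest unique_id created within `window` before `now`, else None."""
    recent = [r for r in records if now - r["created_at"] <= window]
    if not recent:
        return None
    return max(recent, key=lambda r: r["created_at"])["unique_id"]


def page_10_deep_link(unique_id):
    """Substitute the id into the deep-link template."""
    return PAGE_10_TEMPLATE.format(unique_id=unique_id)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    records = [{"unique_id": "abc123", "created_at": now - timedelta(minutes=15)}]
    print(page_10_deep_link(latest_recent_id(records, now)))
```

In the suite, the browser would open this URL right after the cookie-based login, so the test starts on page 10.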

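Sample code:

```python
import os

import requests
from robot.api.deco import keyword


class BuildCardKeywords:  # hypothetical name for the keyword library class
    @keyword
    def get_ongoing_unique_id(self, username):
        """Return the unique_id of the user's first ongoing 'ss' card, if any."""
        unique_id = "no id found"
        # Log in through the API to obtain an auth token
        payload = {"email": username, "password": os.environ.get("app.password")}
        response = requests.post(os.environ.get("app.api.loginapi"), data=payload)
        header = {"token": response.json()["token"], "content-type": "application/json"}
        # Fetch the ongoing cards created by backend runs
        response = requests.get(os.environ.get("app.api.ongoing"), headers=header)
        assert response.status_code == 200
        for item in response.json()["unique_ids"]:
            if item["card_type"] == "ss":
                unique_id = item["unique_id"]
                break
        return unique_id
```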

This approach made sure our tests were atomic and we were testing what we wanted to test rather than unnecessary flows.

5. Bad Code Structure & Readability (solution: atomic tests with Gherkin support): The Java code followed a test-script style, chaining page-object and page-action calls without proper atomic test flows or user-journey verifications.

A sample from the Java code that downloads a PDF file from the card menu:

```java
fromPageFactory.Login();
fromPageFactory().goToHome();
fromPageFactory.clickGoButton();
fromPageFactory().clickBuildNowBtn();
fromPageFactory.clickNext();
fromPageFactory().typeCardDetails();
fromPageFactory.clickOnMenuOption();
fromPageFactory().clickOnPdfDownload();
```

The same check written in Robot Framework with its built-in Gherkin support:

```robot
*** Test Cases ***
Author : Chandan | Scenario: Verify that pdf download is working correctly on build card page
    [Tags]    component    high    pdf    buildcard    regression
    Given User is on buildcardpage
    When User clicks on menu option    ${build_card_menu_options.DownloadPDF}
    Then PDF should be generated successfully
```

Much clearer and more readable, right?
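Robot Framework can run Gherkin-style steps because, when a keyword name starts with Given, When, Then, And, or But, that prefix is ignored while looking up the keyword. The sketch below is a simplified Python model of that lookup, not Robot's actual implementation: it only ignores case, whereas Robot also ignores spaces and underscores in keyword names, and it does not handle arguments. The keyword names come from the scenario above.

```python
import re

# BDD prefixes that Robot Framework ignores when matching keyword names.
BDD_PREFIX = re.compile(r"^(given|when|then|and|but)\s+", re.IGNORECASE)


def resolve_keyword(step, keywords):
    """Find the keyword a step calls, retrying without a leading BDD prefix."""
    normalized = {name.lower(): name for name in keywords}
    if step.lower() in normalized:
        return normalized[step.lower()]
    stripped = BDD_PREFIX.sub("", step, count=1)
    return normalized.get(stripped.lower())
```

This is why the scenario reads as plain English while each line still maps to an ordinary, reusable keyword.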

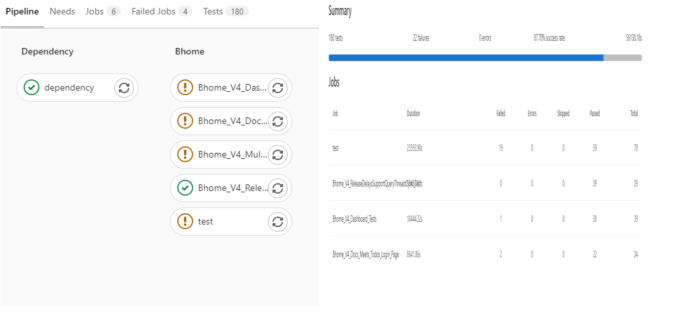

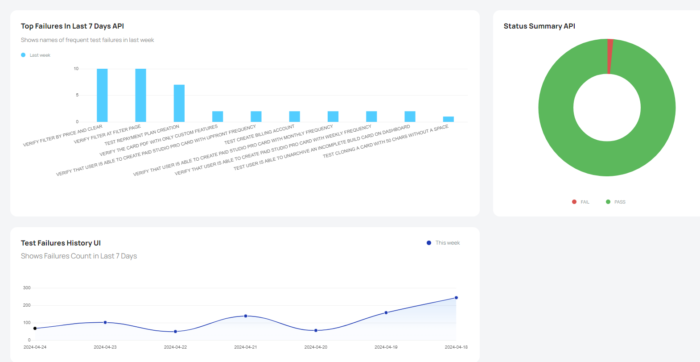

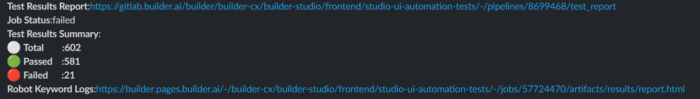

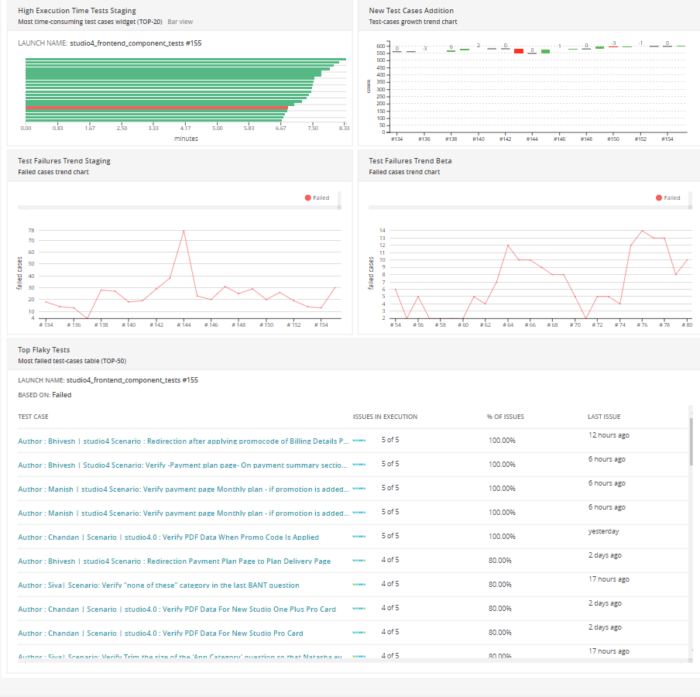

6. No Metrics & Analysis on Previous Results (solution: Django dashboard, GitLab pipeline reports, ReportPortal, Grafana dashboard, real-time alerts, Slack hooks): Making decisions based on test runs is the most important part. Without easier debugging, clear reports, and data-driven decisions, we could not tell which tests to focus on, and we spent days fixing or analyzing issues.

We started dumping our results into a database to understand test behavior over time. This also helped us migrate the flaky Java tests first, so automation sign-off was not disrupted or missed while development of the Robot Framework project was in progress.

Clear reports and clear errors helped us focus on failures and fix and report them quickly, compared to Java, where analysis took a lot of time because we depended only on the current day's results in HTML files.

A combined view of multiple products also made it easier to follow up with the relevant team member, which was difficult with TestNG (merging multiple HTML files through emails).

A sample of some of our reporting changes is attached as an image.
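A minimal sketch of the results-database idea using Python's built-in sqlite3 module. The table schema, the product and test names, and the definition of a flaky test (one that both passed and failed across the stored runs) are assumptions for illustration; dashboards like the ones listed above would sit on top of data shaped like this.

```python
import sqlite3


def open_results_db(path=":memory:"):
    """Create a minimal results table (hypothetical schema)."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        " run_id TEXT, product TEXT, test TEXT, status TEXT)"
    )
    return conn


def record(conn, run_id, product, test, status):
    """Store one test outcome ('PASS' or 'FAIL') for a run."""
    conn.execute("INSERT INTO results VALUES (?, ?, ?, ?)", (run_id, product, test, status))


def flaky_tests(conn, product):
    """Tests that both passed and failed across stored runs (assumed definition)."""
    rows = conn.execute(
        "SELECT test FROM results WHERE product = ? GROUP BY test"
        " HAVING SUM(status = 'PASS') > 0 AND SUM(status = 'FAIL') > 0"
        " ORDER BY test",
        (product,),
    )
    return [test for (test,) in rows]


def flakiness_rate(conn, product):
    """Percentage of a product's distinct tests that are flaky."""
    (total,) = conn.execute(
        "SELECT COUNT(DISTINCT test) FROM results WHERE product = ?", (product,)
    ).fetchone()
    return 100.0 * len(flaky_tests(conn, product)) / total if total else 0.0
```

Because outcomes are kept across runs instead of only in the current day's report, a query like flaky_tests can single out unstable tests to migrate or fix first.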

The results of all this:

1. We executed 301k test runs, compared to 71k with Java (roughly 4.2 times as many).

2. We removed 800+ Java cases and moved them to Robot Framework (the 60 pending ones were obsolete).

3. We migrated 4 years of Java work to Robot Framework in under 6 months. That's no joke, right? :D

4. We added 1800+ new tests in Robot Framework: not just migrated Java ones, but previously missing tests too, with the help of the data-driven approach.

5. We raised around 153 issues, compared to 65 with Java.

6. Flakiness in working tests dropped from 15% to 4.5% per product (thanks to atomic tests and component tests).

7. Test execution time dropped from 3 hours to under 30 minutes per product. It can be reduced further if platform performance improves; we just need to increase the thread count :D
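Relative changes like these are easy to recompute from the raw figures. This sketch uses the numbers from the list above, treating "under 30 mins" as exactly 30 minutes, so the computed time reduction and speedup are lower bounds.

```python
def relative_change(before, after):
    """Percentage change from `before` to `after`; negative values are reductions."""
    return 100.0 * (after - before) / before


def speedup(before, after):
    """How many times faster `after` is than `before`."""
    return before / after


flakiness_change = relative_change(15.0, 4.5)  # flaky share per product, in percent
issues_change = relative_change(65, 153)       # issues raised, Java vs Robot Framework
time_change = relative_change(180, 30)         # execution minutes per product
time_speedup = speedup(180, 30)                # 3 hours vs (at most) 30 minutes

print(f"flakiness: {flakiness_change:.1f}%")   # flakiness: -70.0%
print(f"issues: {issues_change:+.1f}%")        # issues: +135.4%
print(f"execution time: {time_change:.1f}% ({time_speedup:.0f}x faster)")
# execution time: -83.3% (6x faster)
```

So dropping from 15% to 4.5% is a 70% relative reduction in flakiness, not a 10.5% one; percentage points and relative change are easy to mix up in reports.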
