Case Study: How I Reduced Appium Test Execution Time By More Than 50%

Recently, I spent time improving the execution time of the automated tests for our Kredivo mobile application. By refactoring the tests and applying a handful of strategies, I reduced our test suite's execution time by more than 50%.

Before Making Improvements:

Total test count: 287; total execution time: 240.532 minutes.

After Making Improvements:

Total test count: 228; total execution time: 113.339 minutes (roughly 53% less).

You may ask why the test count went down. You will find the answer by the end of this article.

Before I discuss the improvements, here are our setup details:

Platform: iOS and Android (using Appium for both)

Devices: We use BrowserStack's cloud device service to run the tests on real devices. A device matching our device parameters is allocated automatically during execution, and our current plan covers 5 devices, so we run 5 tests in parallel.

Programming language: Java, with shell scripting for clean-up activities.

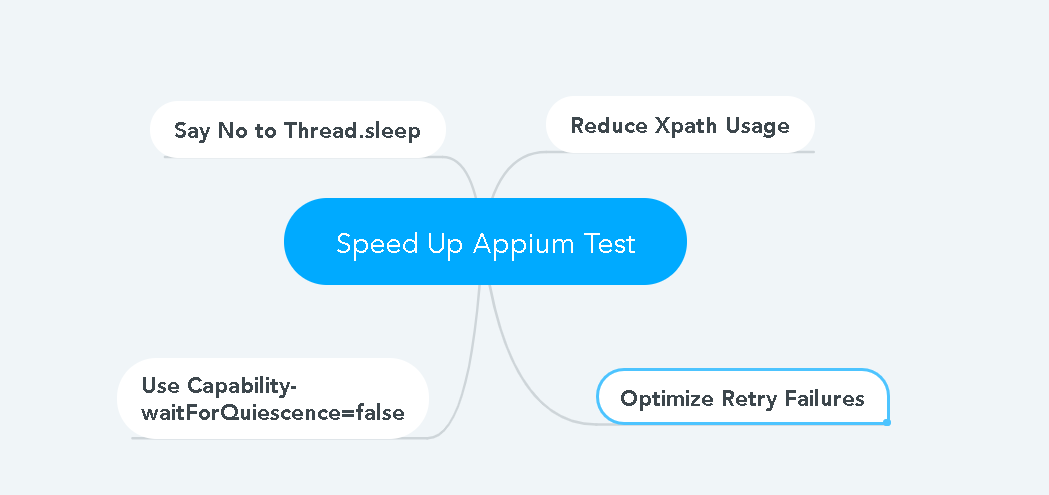

The improvements I made to speed up test execution are listed below:

1. Minimize XPath Usage: Search for elements by ID (Android) or accessibility ID (iOS). This may look like a small issue, since the difference per lookup ranges from a few milliseconds to a few seconds, but when your tests look up more than 1,000 elements it becomes significant, especially in iOS tests, where accessibility ID lookups are much faster than XPath. No excuses here: work with your developers to add IDs and accessibility IDs to the application. I asked our iOS developer to add accessibility IDs where they were missing.
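Putting locator choice in one place makes the "IDs first, no XPath" rule easier to enforce. The sketch below is hypothetical (the `Platform`, `Locator`, and `LocatorRegistry` names are not from the article). It only encodes the decision, using the strategy names Appium understands (`id` and `accessibility id`), so it runs without a device. In a real suite the result would be turned into Selenium's `By.id(...)` or the Appium java-client's `AppiumBy.accessibilityId(...)`.

```java
import java.util.Map;

// Hypothetical platform flag; the article runs the same tests on Android and iOS.
enum Platform { ANDROID, IOS }

// A locator as (Appium strategy name, value).
final class Locator {
    private final String strategy;
    private final String value;

    Locator(String strategy, String value) {
        this.strategy = strategy;
        this.value = value;
    }

    String strategy() { return strategy; }
    String value() { return value; }
}

// Hypothetical registry mapping a logical element name to its per-platform identifier.
final class LocatorRegistry {
    private final Map<String, Map<Platform, String>> ids;

    LocatorRegistry(Map<String, Map<Platform, String>> ids) {
        this.ids = ids;
    }

    // Resource id on Android, accessibility id on iOS. There is deliberately no XPath
    // fallback: a missing id fails loudly so it can be raised with the developers.
    Locator locatorFor(String logicalName, Platform platform) {
        Map<Platform, String> perPlatform = ids.get(logicalName);
        String value = perPlatform == null ? null : perPlatform.get(platform);
        if (value == null) {
            throw new IllegalStateException("No id for '" + logicalName + "' on " + platform
                + "; ask the developers to add one instead of using XPath");
        }
        return platform == Platform.ANDROID
            ? new Locator("id", value)
            : new Locator("accessibility id", value);
    }
}
```

Failing on a missing ID, rather than silently falling back to XPath, turns each gap into a request for the developers, in line with the approach described above.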

2. Optimize Retry Failures: This was the second-biggest culprit. To absorb flakiness, we used a retry listener that re-ran each failed test once before marking it as failed (intermediate failures were still captured in the report). The problem with this approach was that genuine failures cost us time, because retrying a genuine failure never helps.

To optimize this, I analyzed the execution pattern of our test cases over the last 3 months (we were already storing test results in a DB) and identified which tests were flakier than others. Based on that analysis, I customized the retry listener so that the default retry count is 0 and only the tests identified as flaky get a retry count of 1. The list of flaky tests is fetched from the DB at run time with a dynamic SQL query over the last 3 months of data, so I don't have to repeat this analysis manually.
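A minimal sketch of this selective-retry policy follows. It assumes the historical results are available as (test name, passed) rows. An in-memory list replaces the article's DB query and 3-month window, and the class names and failure-rate threshold are hypothetical. A test counts as flaky when it has both passed and failed in the window. Tests that only ever failed keep zero retries, since retrying a consistent failure only wastes time. In TestNG, `shouldRetry` would be called from an `IRetryAnalyzer.retry(ITestResult)` implementation.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// One historical execution row, e.g. loaded from the results DB (shape is hypothetical).
final class TestRun {
    final String testName;
    final boolean passed;

    TestRun(String testName, boolean passed) {
        this.testName = testName;
        this.passed = passed;
    }
}

final class FlakyRetryPolicy {
    private final Set<String> flakyTests = new HashSet<>();
    private final Map<String, Integer> retriesUsed = new HashMap<>();

    // Flaky = both passed and failed in the history window, with a failure rate of at
    // least minFailureRate (a hypothetical tuning knob).
    FlakyRetryPolicy(List<TestRun> history, double minFailureRate) {
        Map<String, int[]> counts = new HashMap<>(); // [passes, failures]
        for (TestRun run : history) {
            int[] c = counts.computeIfAbsent(run.testName, k -> new int[2]);
            c[run.passed ? 0 : 1]++;
        }
        for (Map.Entry<String, int[]> e : counts.entrySet()) {
            int passes = e.getValue()[0];
            int failures = e.getValue()[1];
            double failureRate = (double) failures / (passes + failures);
            if (passes > 0 && failures > 0 && failureRate >= minFailureRate) {
                flakyTests.add(e.getKey());
            }
        }
    }

    // Default retry budget is 0; only known-flaky tests get a single retry.
    int maxRetries(String testName) {
        return flakyTests.contains(testName) ? 1 : 0;
    }

    // Called after a failure; returns true if the test should be re-run.
    boolean shouldRetry(String testName) {
        int used = retriesUsed.getOrDefault(testName, 0);
        if (used < maxRetries(testName)) {
            retriesUsed.put(testName, used + 1);
            return true;
        }
        return false;
    }
}
```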

3. Say A Hard No To Thread.sleep: This was the third-biggest culprit in terms of execution time. My team members had added many sleeps to stabilize tests. Tests do become more stable this way, but at a high cost: each sleep wasted between 5 and 30 seconds, depending on the test case. To fix this, I created a custom await-style wait that repeatedly checks a condition in the DB or an external system, something our explicit waits couldn't handle.

Sample (Awaitility-style syntax): await().atMost(5, SECONDS).until(statusIsUpdatedInDB());
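If you don't have a polling library, you can sketch the same "poll until true or time out" behavior with the JDK alone. This is a hand-rolled stand-in for the `await().atMost(...).until(...)` call above, not the author's implementation. `PollingWait` and its parameters are hypothetical. It checks the condition immediately, then every `pollInterval`, and returns `false` once `timeout` elapses. A DB update that lands quickly therefore ends the wait within one poll interval, instead of after a fixed 5 to 30 second sleep.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

final class PollingWait {
    private PollingWait() { }

    // Returns true as soon as the condition holds, false if the timeout elapses first.
    static boolean until(BooleanSupplier condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            long remainingNanos = deadline - System.nanoTime();
            if (remainingNanos <= 0) {
                return false;
            }
            try {
                // Sleep for one poll interval, or for whatever is left of the timeout.
                Thread.sleep(Math.min(pollInterval.toMillis(), Math.max(1, remainingNanos / 1_000_000)));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve interrupt status for the caller
                return false;
            }
        }
    }
}
```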

4. Use Multiple Types Of Explicit Wait: We used explicit waits for most Appium element-related tasks, but a single explicit wait has a drawback: whenever an element is absent (for example, when a test fails with an element-not-found error), the wait consumes the full timeout set when it was declared. To fix this, I created several explicit wait types and refactored the tests to use whichever one fit the situation:

Long wait (30 seconds): used only when waiting for the first element on a newly opened page, since a new page takes time to load.

Short wait (10 seconds): used for elements that depend on an API call after the page has loaded. Any API taking more than 10 seconds was reported as a bug.

Minimal wait (2 seconds): used for elements that depend on app logic rather than an API (for example, something hidden that appears once the user performs an action). This wait also suits optional elements, i.e. elements that may or may not appear.

No wait (0 seconds): used for elements that are rendered as soon as the page loads, such as buttons and labels. The first element on a page uses the long wait; after that, all elements on the page were refactored to use no wait unless an app-logic or API step is involved.
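One way to encode these tiers is an enum that carries each timeout, so tests choose a tier by name instead of hard-coding seconds. In this sketch a `Supplier<Optional<T>>` stands in for an element lookup, so it runs without a device. `WaitTier` and `find` are hypothetical names. With Selenium/Appium, the same durations would typically be passed to `new WebDriverWait(driver, tier.timeout)`.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.function.Supplier;

enum WaitTier {
    LONG(Duration.ofSeconds(30)),   // first element on a newly opened page
    SHORT(Duration.ofSeconds(10)),  // elements that depend on an API response
    MINIMAL(Duration.ofSeconds(2)), // app-logic toggles and optional elements
    NONE(Duration.ZERO);            // static elements once the page is known to be loaded

    final Duration timeout;

    WaitTier(Duration timeout) {
        this.timeout = timeout;
    }

    // Repeats the lookup until it yields a value or this tier's timeout elapses.
    // NONE performs exactly one lookup and never sleeps.
    <T> Optional<T> find(Supplier<Optional<T>> lookup, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            Optional<T> result = lookup.get();
            if (result.isPresent() || System.nanoTime() >= deadline) {
                return result;
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return Optional.empty();
            }
        }
    }
}
```

Because `NONE` performs a single lookup, a missing static element fails immediately instead of using up the 30-second long-wait timeout.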

5. Focus On Test Coverage Rather Than Test Count: This is where execution time improved the most. Our suite contained many duplicate test cases. For example, login was part of many tests, yet we still had a separate login test. There is no need to test the same thing again and again: if login breaks, the other login-dependent tests will tell you.

Similarly, we had tests for the social media pages (YouTube, Twitter, Facebook, and Instagram), with an individual test for each. These pages are static, so all four can be checked in a single test: since we are already in the social media section, the test verifies one network, goes back using the device's back button, and moves on to the next until all of them are covered.

As a result, the test count went down, but we saved a lot of time on Appium driver setup, app installation, app launch, and duplicated test steps. It was completely worth it.

This improvement saved the most time, around 45 to 50 minutes, and it explains the lower test count shown at the beginning of this article.
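The consolidated social media test can be structured as one loop over the networks inside a single session. The sketch assumes a hypothetical `SocialSection` page object, whose methods in a real suite would wrap Appium calls and the device back button. `SocialLinksCheck` is also a hypothetical name. The loop collects failures instead of stopping at the first one, so a broken YouTube link does not hide the results for the remaining networks. This is one trade-off to keep in mind when merging tests.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical page-object contract for the social media section.
interface SocialSection {
    void openLink(String network);  // tap the network's icon
    String visiblePageTitle();      // read an identifying title from the opened page
    void goBack();                  // device back navigation to the social section
}

final class SocialLinksCheck {
    private SocialLinksCheck() { }

    // One session visits every network in turn, instead of one test (with its own
    // driver setup and app launch) per network. Returns the networks that failed.
    static List<String> verifyAll(SocialSection section, List<String> networks) {
        List<String> failures = new ArrayList<>();
        for (String network : networks) {
            section.openLink(network);
            if (!section.visiblePageTitle().contains(network)) {
                failures.add(network);
            }
            section.goBack();
        }
        return failures;
    }
}
```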

6. Every Command To The Appium Server Is Costly, So Think Before Using Each One: One of the main reasons Appium is slower than XCUITest and Android Espresso is its client-server architecture. It offers code reusability, but every Appium command costs time, so be careful with each one and use a different approach whenever possible. Use API/DB calls for steps whose UI has already been verified in other tests: your focus should be to test each UI component once, and once it works, other tests can reach the same state through API/DB calls.
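To illustrate replacing UI steps with an API check, the sketch below builds (but does not send) a request with the JDK's `java.net.http` client. The endpoint path, bearer-token header, and names are hypothetical. The idea: once the order-history screen is covered by its own UI test, other tests can confirm the resulting state through the backend instead of navigating there with Appium.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

final class ApiChecks {
    private ApiChecks() { }

    // Builds a GET for a hypothetical order-status endpoint, so a test can confirm the
    // backend state directly instead of walking to a history screen through Appium.
    // Assumes orderId is already URL-safe.
    static HttpRequest orderStatusRequest(String baseUrl, String orderId, String authToken) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/orders/" + orderId + "/status"))
            .timeout(Duration.ofSeconds(10))
            .header("Authorization", "Bearer " + authToken)
            .GET()
            .build();
    }
}
```

Sending it would be `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, which throws the checked `IOException` and `InterruptedException`.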

7. Use In-Memory Caching Wherever Possible: This relates to point #6. Since each Appium command has a time cost, screens with many elements deserve extra care, especially TextView components whose text you need to extract and verify (our payment tests, for example, verify multiple prices, the tenure, and the item list after payment). Instead of issuing a separate find element command for each TextView, issue one find elements command to get all the TextView components and then verify their text by index. This approach is only recommended on screens with many elements, since with only a few elements the time difference is negligible.
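The sketch below covers the verification half of this tip. It assumes the screen's texts were already captured in one pass (a single `findElements` call, then `getText()` on each element) into a list. Each `getText()` is still its own Appium command, so what the approach saves is the per-element lookups: 2N commands (a find plus a read per element) become N + 1. `ScreenTextCheck` and the index layout are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class ScreenTextCheck {
    private ScreenTextCheck() { }

    // Compares expected values against texts captured once from the screen. Keys are
    // positions in the captured list (hypothetical layout, e.g. 0 = price, 1 = tenure).
    // Returns readable mismatch descriptions; an empty list means everything matched.
    static List<String> mismatches(List<String> capturedTexts, Map<Integer, String> expectedByIndex) {
        List<String> problems = new ArrayList<>();
        // TreeMap iterates in index order, giving a stable report.
        for (Map.Entry<Integer, String> e : new TreeMap<>(expectedByIndex).entrySet()) {
            int index = e.getKey();
            if (index < 0 || index >= capturedTexts.size()) {
                problems.add("index " + index + " missing (only " + capturedTexts.size() + " texts)");
            } else if (!capturedTexts.get(index).equals(e.getValue())) {
                problems.add("index " + index + ": expected '" + e.getValue()
                    + "' but found '" + capturedTexts.get(index) + "'");
            }
        }
        return problems;
    }
}
```

Index-based checks break if the screen layout changes, so keeping each screen's expected indexes in one place may make them easier to maintain.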

8. Use Deeplinks: Deeplinks are commonly used in marketing campaigns: clicking such a link takes the user straight to a specific page if the app is already installed on the device. Test automation can use them too, saving the execution time otherwise spent navigating to that page. For example, after login I go directly to the deals section of our app using the “appname://deals” deeplink to run deals-related tests, without wasting time tapping menu -> deals and so on.
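Building deeplink URLs in one helper keeps the scheme and paths consistent across tests. This sketch only constructs the URI. `appname` mirrors the article's placeholder, and the query-parameter support is a hypothetical extension that only helps if the app's router reads it. Opening the link is driver-specific. On Android, the UiAutomator2 driver offers a `mobile: deepLink` extension for this; check the Appium driver documentation for the iOS equivalent.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

final class DeepLinks {
    private DeepLinks() { }

    // Builds e.g. "appname://deals" or "appname://deals?category=phones".
    // Scheme and path come from the app; query parameters are hypothetical.
    static URI build(String scheme, String path, Map<String, String> query) {
        StringBuilder link = new StringBuilder(scheme).append("://").append(path);
        if (!query.isEmpty()) {
            StringJoiner params = new StringJoiner("&", "?", "");
            // TreeMap keeps parameter order deterministic.
            for (Map.Entry<String, String> e : new TreeMap<>(query).entrySet()) {
                params.add(URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                    + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
            }
            link.append(params);
        }
        return URI.create(link.toString());
    }
}
```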

9. Other Small Improvements: Apart from the above, I made many smaller improvements, such as reducing the timeout value on BrowserStack, adding the waitForQuiescence=false capability, using app switching when element rendering takes a long time, using setValue instead of sendKeys wherever possible, and using wait intervals in milliseconds rather than seconds between element clicks where needed (sometimes execution was so fast that it caused race conditions).

These improvements did not have a big impact, but they still made a visible difference of 3 to 5 minutes.
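For reference, the waitForQuiescence change is a single capability. The sketch below builds a plain capabilities map for an iOS (XCUITest) session. The device values are hypothetical, and the BrowserStack-specific settings from the article are omitted. With `waitForQuiescence` set to `false`, the XCUITest driver stops waiting for application quiescence (an idle state) while performing queries. This can save time on screens with continuous animations, though it may need compensating waits elsewhere.

```java
import java.util.HashMap;
import java.util.Map;

final class IosCapabilities {
    private IosCapabilities() { }

    // Base capabilities for an iOS run; device name and version are supplied by the caller.
    static Map<String, Object> baseCapabilities(String deviceName, String platformVersion) {
        Map<String, Object> caps = new HashMap<>();
        caps.put("platformName", "iOS");
        caps.put("appium:automationName", "XCUITest");
        caps.put("appium:deviceName", deviceName);
        caps.put("appium:platformVersion", platformVersion);
        // Do not wait for the app to become idle before each query.
        caps.put("appium:waitForQuiescence", false);
        return caps;
    }
}
```

With the Selenium/Appium Java bindings, such a map could be wrapped as `new DesiredCapabilities(caps)` when creating the driver.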

After applying all these improvements, we cut test execution time by more than 50%. We now use the time saved to run two test suite iterations during the day. This was not possible before, because the high execution time meant all tests could only run in nightly builds.

Did you like these improvements? Leave your feedback in the comments.